Takeaways from the first AI-Enabled Policymaking Project (AIPP) workshop

Co-hosted with RAND Corporation, the Tony Blair Institute for Global Change, the Stimson Center, and Tyler Cowen’s Emergent Ventures

The gap between technological change and institutional response is widening, and AI is forcing that tension into the open.

Governments face mounting pressure to act quickly and regulate responsibly without sacrificing deliberation, transparency, and trust. But meeting this moment requires new tools, workflows, and the imagination to rethink how democratic institutions should fundamentally operate.

On May 15, 2025, the AI-Enabled Policymaking Project, in partnership with RAND Corporation, the Tony Blair Institute for Global Change, the Stimson Center, and Emergent Ventures at the Mercatus Center, convened 80 leaders from government, civil society, and industry in Washington, D.C., to explore how AI might become part of the governance stack.

A full workshop report will be published by RAND later this summer. These are our preliminary takeaways.

“Science gathers knowledge faster than society gathers wisdom.” - Isaac Asimov

Why we built AIPP

This work emerged from lessons learned in 2024, when I was deeply engaged in California’s AI policy debate for SB 1047, the Safe and Secure Innovation for Frontier AI Models Act. This ambitious and widely debated bill would have reshaped the regulatory landscape for frontier models.

But I was struck by how overwhelmed even the most capable policy teams were. Staffers struggled to access technical expertise, anticipate downstream implications, or quantify impact for affected stakeholders. This experience prompted a deeper question: What if AI could actually help governments govern?

This idea became the seed for the AI-Enabled Policymaking Project, which I co-founded with Galen Hines-Pierce at the Stimson Center.

AIPP builds on a growing set of global examples where AI is being used in policymaking:

In 2020, the U.S. state of Ohio began using AI to revise its administrative law

In 2023, Porto Alegre, Brazil, passed the first known law co-written by AI

In 2024, 51% of U.S. federal, state, and local government employees reported using AI tools weekly or daily (Ernst & Young)

In 2025, the UAE formally announced that AI would help write laws, while the U.S. Food and Drug Administration approved a rollout of AI across all centers

As Foreign Policy put it last month, AI has the potential to improve deliberation and pluralism in policymaking.

Key takeaways

The workshop focused on practical use cases and prototypes across the full policymaking lifecycle, from problem identification and stakeholder engagement to implementation and oversight.

Breakout sessions explored:

How public servants could use LLMs to process constituent communications or stakeholder input at scale

Whether simulation-based compromise scenarios might help overcome political gridlock

How asynchronous deliberation platforms might surface insight and dissent more effectively than traditional hearings

What it would take to forecast regulatory bottlenecks (like ITAR compliance) while policies are being drafted

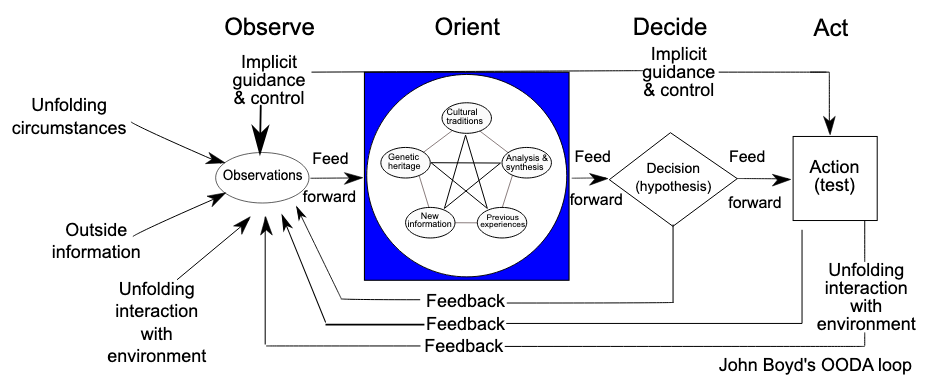

Much of the conversation focused on how AI tools could make policymaking ‘better, faster, or cheaper’ across the OODA loop (observe, orient, decide, act): drafting testimony, summarizing public input, translating policy for different audiences, and so on.

But underneath was a more fundamental idea that we’ll continue to explore:

AI will accelerate policy workflows but also reshape governance itself, which demands new institutions, norms, and models of democratic legitimacy.

Where AI is being used today

We heard from startups with tools in production and government teams already experimenting with AI systems inside their workflows.

Some government employees are using LLMs as “practice partners” to refine arguments, simulate policy outcomes, or translate between stakeholder groups. Others are testing CRM-style knowledge platforms to track historical legislative positions, sentiment, and past agency actions.

Current gaps

The widening gap between public institutions’ ability to observe, orient, decide, and act and the speed and clarity of the institutions they aim to govern calls for a fundamental rethinking of both how AI can improve existing processes and how it can force the evolution of our institutions over the long term.

First, most public servants are engaging in shallow, isolated use of AI — cobbling together simple Copilot or ChatGPT queries without robust institutional support or clear authorization. The result is fragmented adoption, not a fully leveraged capability.

Second, AI tools risk flattening nuance in public engagement. Without intentional safeguards, deliberative processes may over-index on majority consensus and marginalize dissenting or less-visible voices.

Third, there’s a growing question of democratic legitimacy. If AI begins to displace consultants, researchers, and analysts, who ensures deliberation, accountability, and public trust?

Next steps

AIPP is focused on two tracks:

1. Applied

We’re scoping and testing tools for real users — staffers, ministers, analysts, and frontline public servants. That includes workflows like constituent synthesis, draft translation, impact modeling, and early-warning signals for regulatory blockers.

2. Institutional

We’re developing common protocols, safe experimentation spaces, and shared standards to guide responsible use. We see AIPP as its own translation layer bringing stakeholders together to build the next layer of dynamic public governance infrastructure.

A full workshop report will be published by RAND later this summer. In the meantime, we’re planning follow-up events focused on the deeper democratic implications: representation, legitimacy, and institutional resilience in an AI-mediated world.

You can learn more about this work from our partners:

Tony Blair Institute for Global Change - Governing in the Age of AI: A New Model to Transform the State (May 2024)

Stimson Center - Hybrid AI and Human Red Teams: Critical to Preventing Policies from Exploitation from Adversaries (March 2025)

If AI is reshaping how we govern, we need more institutions willing to meet that shift head-on with intention and collaboration.

Join us

If you’re working at the intersection of artificial intelligence and public decision-making, we hope you’ll join us.

📧 ai4policy@rand.org

📎 https://www.ai4policy.org/

Many thanks to Jeff Alstott, Casey Dugan, Dulani Woods, Galen Hines-Pierce, Sam Mor, Jakob Mökander, Keegan McBride, Rebecca Poole, David Milestone, Marie Teo, Rachel Chapman, Kamalei Lee, and Tyler Cowen, as well as everyone at RAND’s Technology and Security Policy Center and the Tony Blair Institute for Global Change Washington, D.C., office.

This is an interesting scan of the landscape of AI as used in policy. The "practice partner" use case is one of the best uses of current AI, and I wish it were adopted in more corporate and legal applications.

Somewhat aligned, I've written about using AI to improve government transparency, and thus decision making. In particular, it has become even more critical that we make the outputs of government deliberation machine-readable and consistent. Happy to provide more info on that if it's of interest. This might be a critical step to unlocking truly world-changing capabilities in policy development, because it can provide an accurate map of practice as balanced against the rules.